Background:

I am a final-year Mechanical Engineering student at Cork IT (Ireland), doing a project for the Irish Road Bowling Association. An accuracy-training facility is being planned for construction, and I am to design an automated scoring system for it. The score is judged mainly on accuracy (the highest score is for the middle of the lane). I am looking for guidance on research and advice from anyone with similar experience.

Simplified, it is a steel bowl (60 mm, 800 g) "rolling" on a tarmac lane at 31 m/s.

Problem:

To sense the position of the bowl relative to the gutter (low accuracy requirement). I am considering/researching the following:

- Analogue photoelectric sensor at the side of the lane

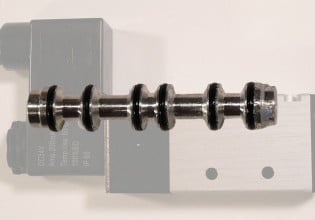

- An array of inductive sensors across the width of the track, under the tarmac

- Machine vision, with points of contrast to help isolate the image
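For a rough sense of the timing that any of these options has to cope with, here is a back-of-envelope sketch in Python. It assumes the 60 mm figure is the bowl diameter and that a sensing line is thin compared with the bowl; the function names, the 5-samples-per-pass figure and the 60 frames/s camera rate are illustrative assumptions, not requirements from the project. At 31 m/s the bowl covers its own diameter in roughly 2 ms.

```python
# Back-of-envelope timing budget for one sensing line.
# Speed and size come from the figures above; everything else is an assumption.

BOWL_DIAMETER_M = 0.060  # 60 mm, assumed here to be the diameter
BOWL_SPEED_M_S = 31.0    # speed quoted above


def crossing_time_s(diameter_m, speed_m_s):
    """Time for the bowl to travel one diameter, i.e. to pass a thin sensing line."""
    return diameter_m / speed_m_s


def min_sample_rate_hz(diameter_m, speed_m_s, samples_per_pass):
    """Sample rate needed to take `samples_per_pass` readings while the bowl is over the line."""
    return samples_per_pass / crossing_time_s(diameter_m, speed_m_s)


def travel_per_frame_m(speed_m_s, frame_rate_hz):
    """Distance the bowl moves between two consecutive camera frames."""
    return speed_m_s / frame_rate_hz


if __name__ == "__main__":
    t = crossing_time_s(BOWL_DIAMETER_M, BOWL_SPEED_M_S)
    print(f"Time over a sensing line: {t * 1e3:.2f} ms")
    # 5 samples per pass is an arbitrary illustrative choice.
    rate = min_sample_rate_hz(BOWL_DIAMETER_M, BOWL_SPEED_M_S, 5)
    print(f"Sample rate for 5 readings per pass: {rate:.0f} Hz")
    # 60 frames/s is an assumed camera rate, not a chosen component.
    step = travel_per_frame_m(BOWL_SPEED_M_S, 60.0)
    print(f"Travel between frames at 60 fps: {step:.2f} m")
```

The same arithmetic applies to sensor response time: a photoelectric or inductive sensor whose response time is not well under the crossing time may miss the bowl altogether.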

Q1 - Any comments on the limitations of these technologies and what issues I might run into down the line?

Q2 - If the cost per sensing line is relatively low, there will be several lines on each of the 3 tracks. The information will be processed and fed back to the players (including a computation of the stopping distance). Should I use a PC or a dedicated processor? Any guidance (including recommended texts) on this and on DAQ would be greatly appreciated!
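To illustrate the kind of processing involved, here is a minimal sketch of one way the stopping distance might be estimated from the times at which the bowl crosses three sensing lines. It assumes constant deceleration between and beyond the lines, which a real bowl bouncing on tarmac will only approximate; the function name and the three-line layout are hypothetical, not part of any existing design.

```python
def estimate_stopping_distance(line_positions_m, crossing_times_s):
    """Estimate stopping distance beyond the last of three sensing lines.

    Assumes constant deceleration (a simplification). Returns a tuple of
    (speed at last line in m/s, deceleration in m/s^2, stopping distance in m).
    """
    x0, x1, x2 = line_positions_m
    t0, t1, t2 = crossing_times_s

    # Under constant deceleration, the average speed over an interval equals
    # the instantaneous speed at the time midpoint of that interval.
    v_a = (x1 - x0) / (t1 - t0)
    v_b = (x2 - x1) / (t2 - t1)
    t_mid_a = 0.5 * (t0 + t1)
    t_mid_b = 0.5 * (t1 + t2)

    decel = (v_a - v_b) / (t_mid_b - t_mid_a)
    if decel <= 0:
        raise ValueError("bowl is not slowing down; cannot estimate stopping distance")

    # Extrapolate from the second midpoint to the moment the last line is crossed.
    v_last = v_b - decel * (t2 - t_mid_b)
    return v_last, decel, v_last ** 2 / (2.0 * decel)
```

This is a handful of arithmetic operations per throw, so the computation itself is light; the timing resolution of the crossing timestamps matters far more, because the deceleration comes from the small difference between two similar speeds.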

Thank you in advance!
